The best-performing email marketing campaigns aren’t built on intuition — they’re built on data. A/B testing removes the guesswork by letting teams compare real audience behavior and optimize for higher engagement and revenue. Instead of relying on instincts or outdated assumptions, testing gives you clear signals on what actually moves the needle.

In this guide, you’ll learn which email elements make the biggest impact, how to run a simple 5-step testing process, and how to interpret your results with confidence. We’ll also cover how AI can streamline the entire workflow and how connecting your tests directly to CRM and sales data (like you can with monday campaigns) turns A/B testing into a predictable, repeatable growth engine.

Key takeaways

- Focus your A/B tests on high-impact elements like subject lines and call-to-action buttons first to drive the biggest improvements in opens, clicks, and revenue.

- Test one element at a time with at least 1,000 recipients per variation and wait for statistical significance before making decisions to avoid costly mistakes.

- Track your tests through the entire sales funnel, not just email metrics, to connect email performance to actual revenue and qualified leads generated.

- Make testing a continuous habit rather than occasional experiments, because each test builds on previous insights, creating compound improvements over time.

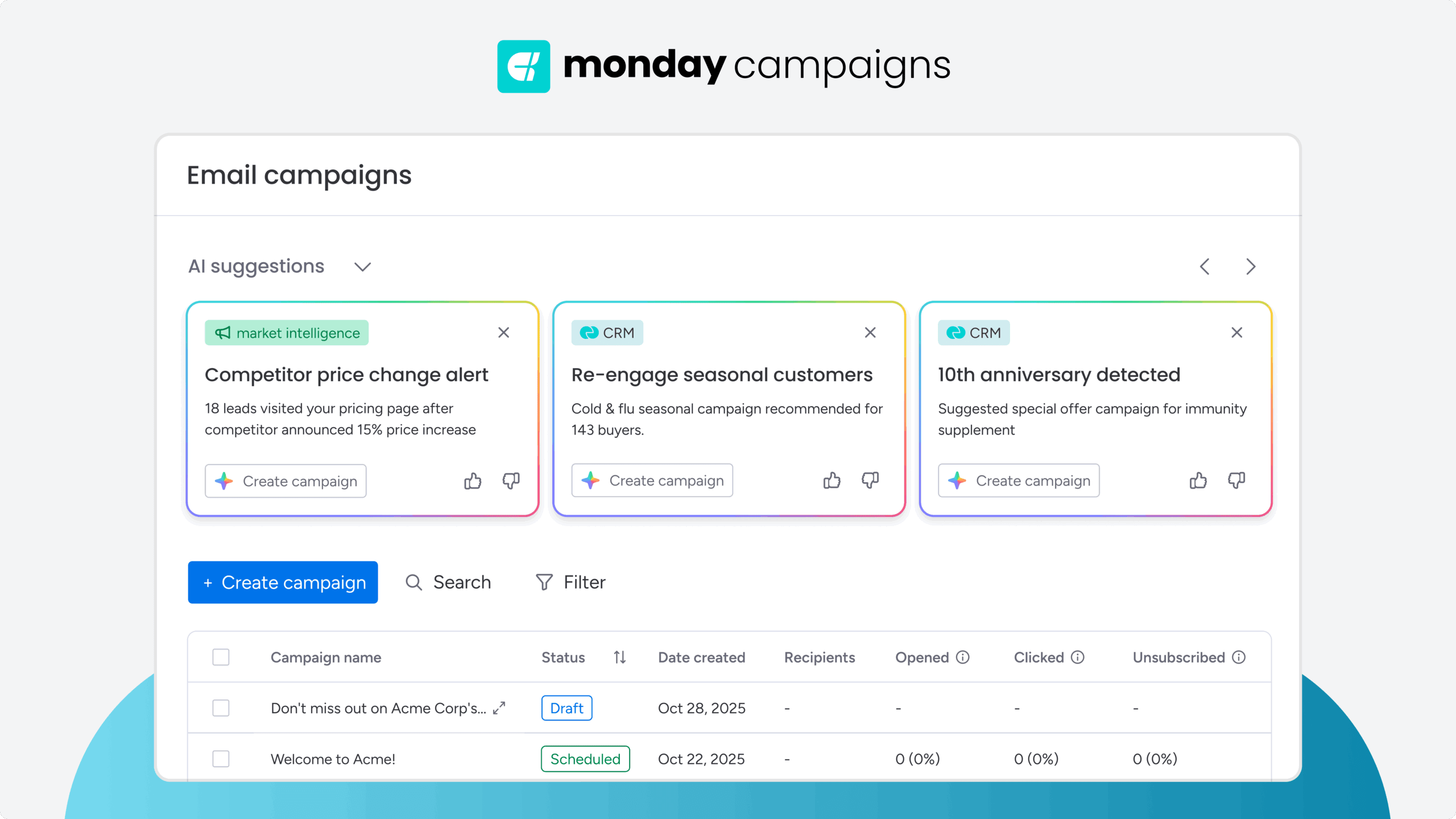

- Automate the entire testing process and use AI to suggest what to test next with monday campaigns, then connect it to monday CRM to see how each test impacts your bottom line.

What is email A/B testing?

Email A/B testing means sending 2 versions of an email to separate, randomly selected groups of your audience to see which performs better. You create variations of a single element, like a subject line or button, and measure which one drives more opens, clicks, or sales.

The winning version goes to the rest of your list. This systematic approach replaces guesswork with data-backed decisions that directly impact revenue.

How split testing works for email campaigns

Split testing follows a straightforward process. You divide your email list into 2 random groups and send each group a different version of your email at the same time.

- Create 2 versions: Change one element like the subject line or call-to-action

- Split your audience: Randomly divide recipients into equal groups

- Send simultaneously: Deliver both versions at the same time

- Measure results: Track which version achieves your goal
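The split step can be illustrated with a short Python sketch. It assumes your list is available as a plain list of email addresses (a hypothetical input; email platforms handle this internally) and shuffles a copy so every recipient has the same chance of landing in either group.

```python
import random


def split_ab(recipients, seed=None):
    """Randomly divide recipients into two equal-sized groups (A and B).

    If the list has an odd length, group B receives the extra recipient.
    """
    shuffled = list(recipients)            # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded RNG makes the split reproducible
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical list of 1,001 subscribers
subscribers = [f"user{i}@example.com" for i in range(1001)]
group_a, group_b = split_ab(subscribers, seed=42)
print(len(group_a), len(group_b))  # 500 501
```

Seeding the generator makes a split reproducible for your records; omit the seed to get a fresh split for each campaign.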

Key components of effective A/B tests

Every test starts with a hypothesis — a prediction about what will improve performance and why. You might hypothesize that adding personalization to subject lines will increase open rates because recipients respond to messages that feel tailored to them.

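Statistical significance determines when results are reliable. This means having enough recipients and letting the test run long enough that random chance becomes an unlikely explanation for the difference. As a rule of thumb, most tests need at least 1,000 recipients per variation and should run for 3-7 days, though the exact requirement depends on your baseline rate and the size of the difference you expect to detect.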

Acting only on statistically significant results prevents costly mistakes. monday campaigns calculates this automatically and alerts you when results are conclusive.

A/B testing vs. multivariate testing

A/B testing compares 2 versions of 1 element. Multivariate testing examines multiple elements at once — like testing different subject lines, images, and CTAs simultaneously.

A/B testing works best for most marketers because it’s simple and delivers straightforward, actionable insights. You need smaller audiences and get results faster. Multivariate testing requires much larger lists and takes longer but can reveal how different elements work together.
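A quick back-of-the-envelope calculation shows why multivariate tests need larger lists. The Python sketch below enumerates every combination of three hypothetical subject lines, two images, and two CTAs, then multiplies the number of combinations by the 1,000-recipients-per-variation rule of thumb used in this guide. The variant names are placeholders.

```python
from itertools import product

# Hypothetical variants for each element under test
subject_lines = ["Ready to save 30%?", "Save 30% today", "Last chance: 30% off"]
images = ["product_photo", "lifestyle_photo"]
ctas = ["Start free trial", "Learn more"]

RECIPIENTS_PER_VARIATION = 1_000  # rule of thumb from this guide

# Every combination of elements becomes its own variation ("cell")
cells = list(product(subject_lines, images, ctas))
multivariate_total = len(cells) * RECIPIENTS_PER_VARIATION

# A simple A/B test on one element needs only two variations
ab_total = 2 * RECIPIENTS_PER_VARIATION

print(len(cells), multivariate_total, ab_total)  # 12 12000 2000
```

Each added element multiplies the number of cells, so the required list size grows much faster than the number of things being tested.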

Why email A/B testing drives campaign success

Testing replaces assumptions with real audience data, helping teams make decisions that directly influence revenue. Every test reveals what actually motivates your audience to take action.

Boosts email marketing ROI with data-driven decisions

Data from testing shows exactly where to invest your budget. Instead of guessing which subject lines work, you know. Instead of wondering about send times, you have proof.

This precision reduces wasted spend and maximizes returns. Research shows that targeted promotions can yield a 1–2% sales lift when properly tested as part of an email marketing strategy.

Improves open rates and click-through performance

Small improvements compound into significant gains. A 2-percentage-point increase in open rates (say, from 20% to 22%) might seem minor, but across a 10,000-recipient send it means 200 more potential customers see your message.

- Open rates improve when you find subject lines that resonate

- Click-through rates rise with compelling CTAs and relevant content

- Conversion rates increase as you refine your value proposition
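To see how small improvements compound, multiply through the funnel. The Python sketch below uses made-up rates (not benchmarks) for a 10,000-recipient send and compares a baseline with a version where opens, clicks, and conversions each improve by 10% relative (for example, open rate from 20% to 22%).

```python
def conversions(recipients, open_rate, click_rate, conversion_rate):
    """Expected conversions for a send.

    In this sketch, click_rate is the share of openers who click and
    conversion_rate is the share of clickers who convert.
    """
    return recipients * open_rate * click_rate * conversion_rate


RECIPIENTS = 10_000

# Illustrative rates only; substitute your own campaign data
baseline = conversions(RECIPIENTS, open_rate=0.20, click_rate=0.10, conversion_rate=0.05)
improved = conversions(RECIPIENTS, open_rate=0.22, click_rate=0.11, conversion_rate=0.055)

print(round(baseline), round(improved))  # 10 13
```

Three 10% lifts multiply to 1.1 × 1.1 × 1.1 ≈ 1.331, so the improved version produces about 33% more conversions, not 10%.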

Essential email elements to A/B test

Not all email elements deliver equal impact. Some changes move the needle on revenue, while others barely register. The smartest marketers focus their testing energy where it matters most — on the elements that directly influence whether recipients open, click, and convert.

Here’s where to start:

Subject lines and preview text testing

Subject lines determine whether recipients even see your message. They’re your first and most important test opportunity. Preview text — the snippet that appears after the subject line — offers another chance to capture attention.

- Personalization: “Sarah, your exclusive offer” vs “Your exclusive offer”

- Urgency: “Last chance: Sale ends tonight” vs “Special sale this week”

- Questions: “Ready to save 30%?” vs “Save 30% today”

- Length: “Big news!” vs “Important updates about your account”

- Emojis: “🎉 Special announcement” vs “Special announcement”

Email copy and content variations

Your email content drives engagement after the open. Test different approaches to find what motivates action.

- Length: Brief, scannable messages vs detailed explanations

- Tone: Conversational and friendly vs professional and formal

- Personalization: Generic content vs dynamic content based on recipient data

- Value focus: Emphasizing savings vs highlighting benefits

Using AI, monday campaigns generates HTML email content variations that match your brand voice while testing different angles.

Call-to-action button optimization

CTAs directly drive conversions. Even small changes can significantly impact click-through rates and revenue.

- Button text: “Get started” vs “Start free trial” vs “Learn more”

- Color: High-contrast colors vs brand colors

- Size: Large, prominent buttons vs smaller, subtle ones

- Placement: Above the fold vs after content

- Quantity: Single focused CTA vs multiple options

Send time and day testing

Timing affects whether your email gets noticed or buried. Optimal send times, a key part of your email cadence, vary by audience, industry, and even individual recipient behavior.

- Weekdays vs weekends

- Morning vs afternoon vs evening

- Time zones for geographically diverse lists

- Behavioral timing based on when individuals typically engage

With tools like monday campaigns, AI helps identify the most effective send times based on your audience’s engagement patterns.
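Behavioral timing boils down to finding when each person usually engages. As a minimal sketch, assuming you can export open events as (email, timestamp) pairs (a hypothetical format), the Python below picks each recipient's most common open hour. AI send-time features typically use richer models; this only illustrates the idea.

```python
from collections import Counter, defaultdict
from datetime import datetime


def preferred_send_hours(open_events):
    """Map each recipient to the hour of day (0 to 23) they most often open email.

    open_events: iterable of (email, datetime) tuples, assumed to be in the
    recipient's local time zone.
    """
    hours_by_recipient = defaultdict(Counter)
    for email, opened_at in open_events:
        hours_by_recipient[email][opened_at.hour] += 1
    # most_common(1) returns [(hour, count)] for the most frequent hour
    return {email: counts.most_common(1)[0][0]
            for email, counts in hours_by_recipient.items()}


events = [
    ("sarah@example.com", datetime(2024, 5, 6, 8, 15)),
    ("sarah@example.com", datetime(2024, 5, 7, 8, 40)),
    ("sarah@example.com", datetime(2024, 5, 9, 19, 5)),
    ("li@example.com", datetime(2024, 5, 6, 21, 30)),
]
print(preferred_send_hours(events))  # {'sarah@example.com': 8, 'li@example.com': 21}
```

Recipients with only one or two recorded opens produce weak signals, so a fallback to your list-wide best hour is a sensible design choice.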

From name and sender testing

Who the email comes from influences trust and open rates. Test different sender strategies to find what builds the strongest connection.

- Personal names: “Sarah from We Love Cats” for relationship building

- Company names: “We Love Cats” for brand recognition

- Department names: “We Love Cats Customer Success” for context

- Hybrid approaches: “Sarah at We Love Cats customer support” for balance

Visual design and layout tests

Design impacts readability and engagement, especially on mobile devices where most emails are read.

- Single-column vs multi-column layouts

- Image placement and size

- White space and padding

- Mobile responsiveness

How to run A/B tests for email campaigns in 5 steps

Running effective A/B tests doesn’t require complex tools or advanced statistics — just a clear process. These 5 steps take you from initial idea to actionable insights, with each stage building on the last:

Step 1: Create your testing hypothesis

Start with a clear prediction using this formula:

“If we [change X], then [result Y] will occur because [reason].”

Strong hypotheses connect to business goals. For example, “If we move our CTA above the fold, then click-through rates will increase because the primary action is immediately visible to readers.”

Step 2: Select one variable to test

Test one element at a time. Multiple changes muddy results and prevent clear insights. Choose high-impact elements first — subject lines and CTAs typically deliver the quickest wins.

Step 3: Calculate sample size and test duration

Reliable results require sufficient data. Most tests need at least 1,000 recipients per variation. Run tests for 3-7 days to account for daily behavior patterns.
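Where does a figure like 1,000 come from? One standard approach (not necessarily the one any particular platform uses) is the sample-size formula for comparing two proportions. The Python sketch below uses only the standard library; the baseline rate, target rate, 95% confidence, and 80% power are example inputs to replace with your own.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(p_baseline, p_target, alpha=0.05, power=0.80):
    """Recipients needed in EACH group to detect a change from p_baseline to
    p_target with a two-sided test at significance level alpha."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_baseline + p_target) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_baseline * (1 - p_baseline) + p_target * (1 - p_target))
    ) ** 2
    return ceil(numerator / (p_target - p_baseline) ** 2)


# Example: detect an open-rate lift from 20% to 25%
print(sample_size_per_variation(0.20, 0.25))
```

With these example inputs the result is roughly 1,100 recipients per variation. Halving the lift you want to detect roughly quadruples the requirement, which is why small expected differences need much larger lists.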

Step 4: Split your email list properly

Random assignment prevents bias. Both groups should have similar characteristics: if one group ends up with mostly new subscribers and the other with mostly long-time customers, the results will be skewed. monday campaigns handles list splitting automatically while maintaining segment integrity.
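One way to keep both groups similar is a stratified split: shuffle recipients within each segment, then deal them alternately into A and B, so new subscribers and long-time customers appear in both groups in nearly equal numbers. This Python sketch assumes each recipient record carries a segment label (the "segment" field name is hypothetical).

```python
import random
from collections import defaultdict


def stratified_split(recipients, seed=None):
    """Split recipients into groups A and B, balancing each segment.

    recipients: list of dicts with at least "email" and "segment" keys.
    """
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for r in recipients:
        by_segment[r["segment"]].append(r)

    group_a, group_b = [], []
    for members in by_segment.values():
        rng.shuffle(members)  # random order within the segment
        # Alternate assignment so each segment is split as evenly as possible
        group_a.extend(members[0::2])
        group_b.extend(members[1::2])
    return group_a, group_b


def count_segment(group, segment):
    return sum(r["segment"] == segment for r in group)


subscribers = (
    [{"email": f"new{i}@example.com", "segment": "new"} for i in range(300)]
    + [{"email": f"loyal{i}@example.com", "segment": "loyal"} for i in range(701)]
)
group_a, group_b = stratified_split(subscribers, seed=7)
print(count_segment(group_a, "new"), count_segment(group_b, "new"),
      count_segment(group_a, "loyal"), count_segment(group_b, "loyal"))  # 150 150 351 350
```

In this sketch, group A gets the extra member of any odd-sized segment; with many small segments, you may want to randomize which group receives it.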

Step 5: Launch and track your test

Monitor performance without making changes mid-test. Document your setup for future reference. Real-time dashboards in monday campaigns show how each variation performs, making it easy to identify winners and understand why they succeeded.

7 A/B testing best practices for email marketing

Not every test deserves equal attention. The difference between effective testing and wasted effort comes down to focus — running the right tests in the right order. These 7 practices separate teams that see incremental gains from those that achieve breakthrough performance:

- Prioritize high-impact test elements: Focus where you’ll see the biggest returns. Subject lines and CTAs typically deliver more value than sender name or footer design tests.

- Make testing a continuous habit: Run tests regularly rather than as occasional experiments. Each test builds on previous insights, creating compound improvements over time.

- Ensure statistical significance before acting: Wait for conclusive results. Acting too early on trends leads to poor decisions and wasted resources.

- Document every test result: Build institutional knowledge. Track what you tested, why, and what you learned for future reference.

- Segment tests by audience type: Different groups respond differently. What works for new subscribers might fail with loyal customers.

- Test across campaign types: Apply testing beyond promotional emails as part of your broader email marketing strategy. Welcome sequences, newsletters, and transactional emails all benefit from optimization.

- Apply insights to future campaigns: Scale winning elements across your email program. monday campaigns helps you save successful variations as templates for future use.
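Documenting results works best with a consistent record per test. The Python dataclass below is one hypothetical shape for such a log entry, reusing the hypothesis formula from Step 1; the field names are illustrative, not a monday campaigns schema.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ABTestRecord:
    """One entry in a team's A/B test log (hypothetical schema)."""
    name: str
    hypothesis: str                 # "If we [change X], then [result Y] will occur because [reason]."
    variable: str                   # the single element that was changed
    variant_a: str
    variant_b: str
    primary_metric: str             # e.g. "click-through rate"
    winner: Optional[str] = None    # stays None until the result is significant
    p_value: Optional[float] = None
    notes: str = ""


record = ABTestRecord(
    name="CTA placement",
    hypothesis=("If we move our CTA above the fold, then click-through rates will "
                "increase because the primary action is immediately visible to readers."),
    variable="CTA placement",
    variant_a="After content",
    variant_b="Above the fold",
    primary_metric="click-through rate",
)
print(json.dumps(asdict(record), indent=2))
```

Storing entries as JSON keeps the log easy to search and to share between marketing and sales.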

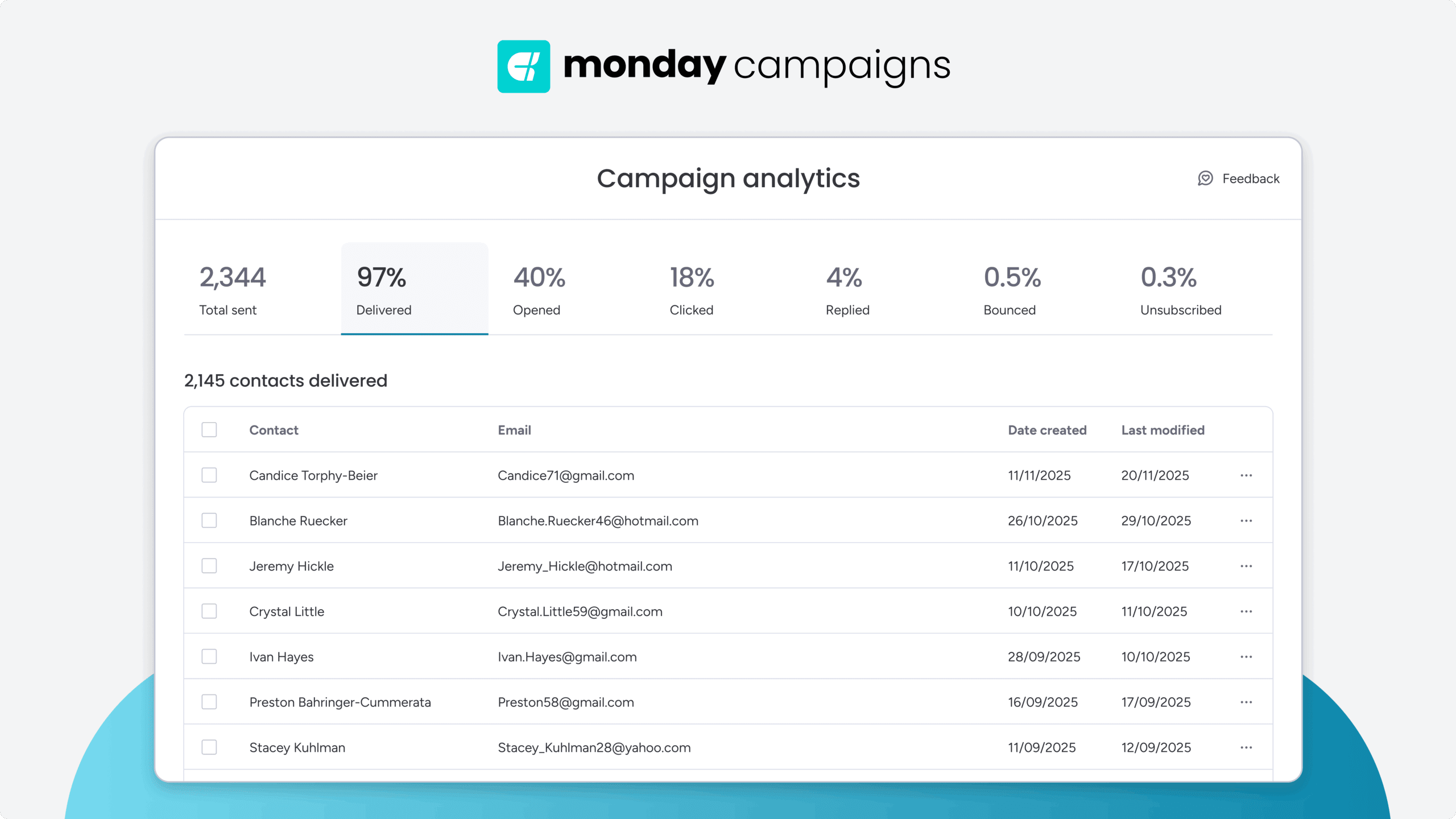

How to analyze A/B test results (and translate them into revenue)

Running a test is the easy part — knowing what the results actually mean is where teams often go off-track. A variation with a slightly higher click rate might look like a win, but without the right analysis, you risk acting on noise instead of meaningful signal. Here’s how to read your data correctly and turn every test into measurable revenue growth.

Read results with statistical confidence

Statistical significance tells you whether your winning variation is real or just random fluctuation. Aim for a 95% confidence level so you’re not making decisions based on chance. Tools like monday campaigns calculate significance automatically and wait to declare a winner until the data is conclusive — removing the guesswork entirely.
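A common method for checking a difference in click or conversion rates is the two-proportion z-test; platforms may use other methods. The Python sketch below returns a two-sided p-value, and a p-value below 0.05 corresponds to significance at the 95% confidence level. The click counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value for the difference between two rates (pooled z-test)."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: clicks out of recipients for each variation
clear_winner = two_proportion_p_value(200, 1_000, 250, 1_000)  # 20% vs 25%
too_close = two_proportion_p_value(200, 1_000, 210, 1_000)     # 20% vs 21%

for label, p in [("20% vs 25%", clear_winner), ("20% vs 21%", too_close)]:
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: p = {p:.3f} ({verdict} at 95% confidence)")
```

The same 1,000 recipients per group are enough to confirm a 5-point lift but not a 1-point one, which is another reason to wait for significance rather than act on early trends.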

Track the metrics that actually matter

Not all metrics carry equal weight. Focus on indicators that tie directly to revenue and customer intent:

- Click-through rate (CTR): Strong predictor of interest and mid-funnel movement

- Conversion rate: The most direct signal of revenue impact

- Unsubscribe rate: Highlights message or audience mismatch

Open rates still matter for deliverability, but they rarely tell the full performance story.

Avoid common A/B testing mistakes

Most underperforming test programs fail because of avoidable errors:

- Testing too many variables: Keeps you from knowing what changed the outcome

- Stopping tests early: Early results often flip as more data comes in

- Ignoring audience segmentation: Different groups respond to different messages

- Chasing vanity metrics: High opens mean nothing without downstream action

- Testing inconsistently: Sporadic testing slows learning and optimization

A disciplined approach builds a compounding advantage over time.

Turn insights into better-performing campaigns

Once a variation wins, apply what you learned immediately. Update templates, refine sending strategies, and use your results to shape the next round of tests. AI-powered tools that learn from each test and automatically recommend similar optimizations can help you iterate faster with less manual work.

Connect A/B tests directly to revenue

Email performance doesn’t end at the click. Track how each variation influences the full customer journey:

- Follow recipients from email → landing page → deal → purchase

- Compare variations based on qualified leads and closed revenue

- Optimize for downstream actions, not just inbox-level metrics

Because monday campaigns is built into monday CRM, every test is tied to sales data, giving you a complete, end-to-end view of which email variations actually generate revenue.
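Comparing variations on revenue rather than clicks can change which one wins. The Python sketch below uses invented numbers and assumes you can join email variations to CRM outcomes (the field names are hypothetical); it reports click-through rate alongside revenue per recipient.

```python
# Hypothetical per-variation totals joined from email and CRM data
results = {
    "A": {"recipients": 5_000, "clicks": 400, "qualified_leads": 60, "closed_revenue": 48_000.0},
    "B": {"recipients": 5_000, "clicks": 520, "qualified_leads": 45, "closed_revenue": 31_500.0},
}


def summarize(name, r):
    """Return (variation, click-through rate, revenue per recipient)."""
    ctr = r["clicks"] / r["recipients"]
    revenue_per_recipient = r["closed_revenue"] / r["recipients"]
    return name, ctr, revenue_per_recipient


rows = [summarize(name, r) for name, r in results.items()]
click_winner = max(rows, key=lambda row: row[1])[0]
revenue_winner = max(rows, key=lambda row: row[2])[0]

for name, ctr, rpr in rows:
    print(f"Variation {name}: CTR {ctr:.1%}, revenue per recipient ${rpr:.2f}")
print(f"Click winner: {click_winner}, revenue winner: {revenue_winner}")
```

Here variation B wins on clicks while variation A generates more revenue per recipient. Revenue is typically noisier than click rates, so it is worth checking that such a difference holds up before acting on it.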

Accelerate email testing success with monday campaigns

Email A/B testing becomes faster, easier, and more impactful when it lives inside your CRM instead of across scattered tools. With monday campaigns built directly into monday CRM, testing shifts from a manual, time-consuming process to a streamlined workflow where variations, segments, and results all sit in one connected system.

AI that guides what to test and why

AI analyzes historical performance and surfaces high-impact testing opportunities so you’re never guessing what to optimize next. Insights are tailored to your audience and goals, and recommendations get smarter over time as the platform learns from every campaign you send.

Revenue visibility through native CRM integration

Most testing stops at opens and clicks; this platform connects results all the way through your sales pipeline. You can see which variation generated qualified leads, influenced opportunities, or contributed to closed revenue — giving teams a complete, end-to-end view of business impact. Marketing and sales finally operate from the same source of truth, making it easier to prioritize tests that drive meaningful growth.

Take a smarter approach to A/B testing email campaigns

Email A/B testing isn’t about running endless experiments — it’s about building a system that consistently delivers better results. Every test you run teaches you something new about your audience, and those insights compound into predictable revenue growth over time.

The difference between teams that see marginal gains and those that achieve meaningful, measurable improvement comes down to having the right infrastructure. When your testing lives inside your CRM, connected directly to sales data and guided by AI recommendations, optimization becomes part of your natural workflow instead of a separate project.

Remove the friction from testing with monday campaigns so you can focus on what matters: understanding your audience and driving revenue. Start with one simple test today — a subject line variation or a CTA change — and watch how small, data-backed improvements create momentum that transforms your entire email program.

FAQs

What is an A/B test for email campaigns?

An A/B test for email campaigns measures engagement across different versions of the same email using a sample of your recipients. You create 2 variations of 1 element, send them to separate groups, and the best-performing version goes to the rest of your list.

What is the most effective A/B testing strategy for email marketing campaigns?

The most effective A/B testing strategy focuses on high-impact elements like subject lines and CTAs first. Test continuously rather than sporadically, ensure statistical significance before acting, and always connect results to revenue metrics rather than vanity metrics.

How long should email A/B tests run?

Email A/B tests should run for 3-7 days or until reaching statistical significance. This duration accounts for different daily engagement patterns and ensures reliable results that aren't skewed by single-day anomalies.

What sample size do I need for email A/B tests?

Most email A/B tests need at least 1,000 recipients per variation for reliable results. Larger sample sizes increase confidence in results, and the exact requirement depends on your expected performance difference and desired confidence level.

Can I A/B test automated email sequences?

Yes, automated email sequences benefit greatly from A/B testing. Test individual emails within sequences or compare entire sequence approaches, as improvements compound over time with automated campaigns.

Which email metrics predict revenue best?

Click-through rate and conversion rate are the strongest revenue predictors. While open rates indicate initial engagement, clicks and conversions directly connect to purchase behavior and bottom-line impact.